Ideal Attributes for Data Preservation

The following attributes are ideal for preserving data during the archival process.

- File-based storage

- Allows for the management of any type of data (structured, unstructured, hybrid)

- Facilitates the preservation of the legacy data’s original structure

- Maintains referential integrity

- Highly compressible

- Light infrastructure footprint

- Flexible storage options

- Metadata-driven

- Robust compliance and governance

- Flexible and responsive access to data

Current Archival Standards

Current archival standards use XML or JSON formatting to store data. There are several distinct challenges with using these formats for data preservation:

- XML is an open format that does not require metadata and offers little control over the relationships between data elements.

- XML does not support every character set that an archive may require.

- XML is not compression-friendly and inflates the stored data, sometimes heavily.

- XML is not the most secure format for storing data.

Some archival systems use an RDBMS (relational database management system) queried through SQL. This makes the archive an application rather than a true archive, so we do not compare those systems in this brief.

Apache Parquet as a Solution

What is Apache Parquet?

Apache Parquet is an open-source columnar storage format for reading and writing data in the Hadoop ecosystem. It supports most of the data types used by Hive, MapReduce, Pig, and Spark SQL. Parquet is widely used for big data processing because it is a binary format that carries metadata describing the stored content. It uses the record-shredding and assembly algorithm described in Google's Dremel paper and is optimized for handling complex data in bulk, as in decommissioning and archival applications.

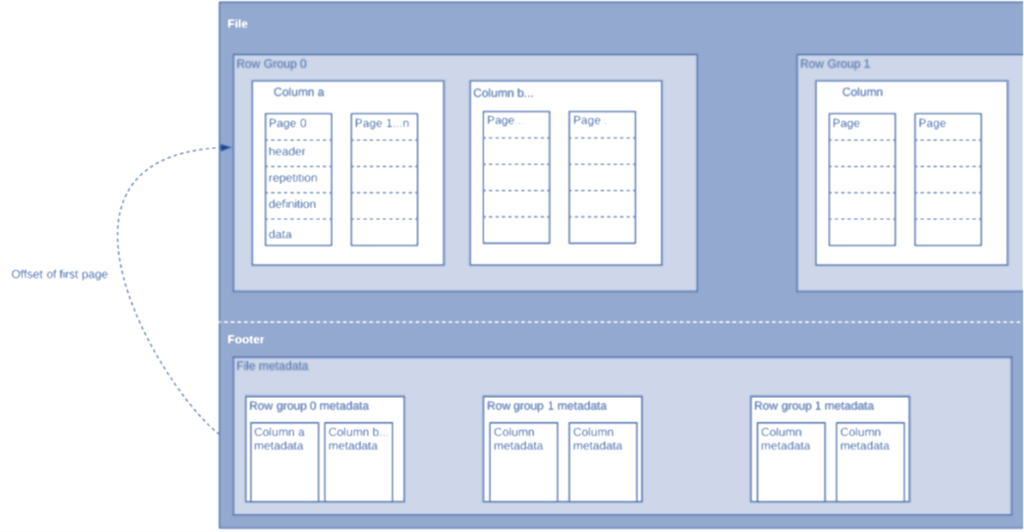

Parquet File Structure

Parquet files store data by column rather than by row, as outlined below.

The file structure contains:

- Header – Contains the 4-byte magic number “PAR1”, which identifies the file as a Parquet file.

- Content/Body – Contains the column chunks of data.

- Footer – Contains the file metadata, the length of the file metadata, and the magic number.
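
The layout above can be sketched in a few lines of Python. This is a minimal illustration rather than a real Parquet reader: the function name `locate_footer` and the sample bytes are hypothetical, and the metadata here is a plain-text stand-in (real Parquet metadata is Thrift-encoded). It assumes the footer length is a 4-byte little-endian integer placed immediately before the trailing magic number.

```python
import struct

MAGIC = b"PAR1"  # 4-byte magic number at both ends of a Parquet file


def locate_footer(data: bytes) -> tuple[int, int]:
    """Return (offset, length) of the file metadata inside `data`."""
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet-style file: magic number missing")
    # The 4 bytes before the trailing magic hold the metadata length
    # as a little-endian unsigned 32-bit integer.
    (meta_len,) = struct.unpack("<I", data[-8:-4])
    meta_start = len(data) - 8 - meta_len
    if meta_start < 4:
        raise ValueError("metadata length exceeds file size")
    return meta_start, meta_len


# Build a stand-in file: header magic, body, metadata, length, footer magic.
body = b"column-chunk-bytes"
metadata = b"schema-and-row-group-info"
fake_file = MAGIC + body + metadata + struct.pack("<I", len(metadata)) + MAGIC

offset, length = locate_footer(fake_file)
print(fake_file[offset:offset + length])  # b'schema-and-row-group-info'
```

Because the length sits at a fixed position relative to the end of the file, a reader can find the metadata with two seeks and never has to scan the body.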

Parquet File Features

The main features of the Parquet file format are its compression mechanism, its column-based approach to analytics, schema evolution, and performance.

Compression Mechanism

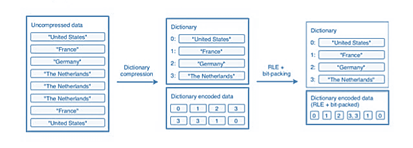

Organizing data by column allows better and more flexible compression, because every value in a column has the same type. It also makes it possible to apply a different encoding method to each column, such as bit packing, run-length encoding, or dictionary encoding.

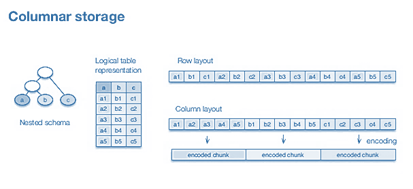
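
To make the encodings concrete, here is a small sketch of dictionary encoding followed by run-length encoding on one column. It is illustrative only: the helper names and the `country` column are hypothetical, and Parquet's real on-disk encodings use a more compact binary representation than these Python lists.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def dictionary_encode(values):
    """Replace each value with a small integer index into a dictionary."""
    dictionary = []
    index_of = {}
    codes = []
    for value in values:
        if value not in index_of:
            index_of[value] = len(dictionary)
            dictionary.append(value)
        codes.append(index_of[value])
    return dictionary, codes


# Hypothetical "country" column from an archived table.
country = ["US", "US", "US", "DE", "DE", "US", "IN", "IN", "IN", "IN"]

dictionary, codes = dictionary_encode(country)
runs = run_length_encode(codes)

print(dictionary)  # ['US', 'DE', 'IN']
print(codes)       # [0, 0, 0, 1, 1, 0, 2, 2, 2, 2]
print(runs)        # [(0, 3), (1, 2), (0, 1), (2, 4)]
```

With only three distinct values, each code fits in two bits, which is the kind of saving bit packing exploits. These techniques work well here because a column holds values of one type that often repeat.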

Column or Row Based Data Analytical Approach

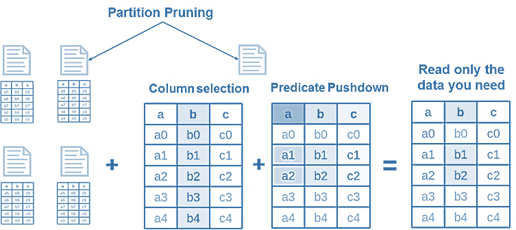

Parquet files store data in column structures rather than in row-based structures like CSV and JSON, which speeds up data processing. Because data is stored by column, a query can skip the fields it does not need, which greatly reduces data retrieval time.

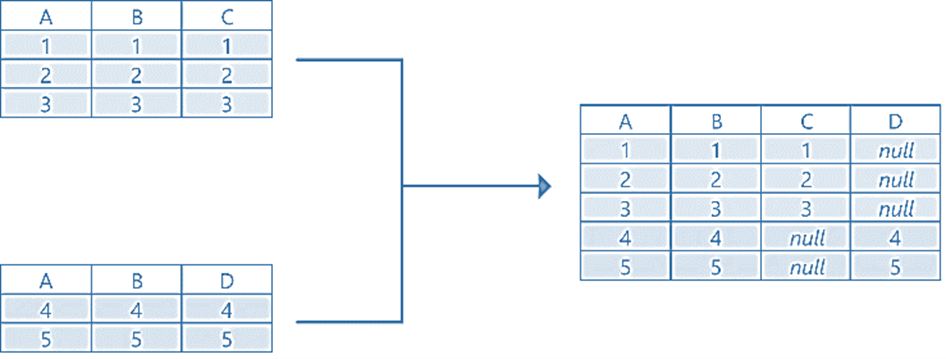
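
The difference can be modeled in plain Python. In this sketch the records and field names are hypothetical, and the row-layout function deliberately walks every field to mimic a text format in which a whole row must be parsed before one field can be used. It is a model of the access pattern, not a benchmark.

```python
# Hypothetical archived records; field names are illustrative only.
rows = [
    {"id": 1, "name": "Ada", "country": "US", "balance": 120.0},
    {"id": 2, "name": "Lin", "country": "DE", "balance": 80.5},
    {"id": 3, "name": "Raj", "country": "IN", "balance": 42.0},
]

# Column-oriented layout: one contiguous list per field.
columns = {field: [row[field] for row in rows] for field in rows[0]}


def total_balance_row_layout(rows):
    """Row layout: every field of every record is visited to reach one column."""
    values_touched = 0
    total = 0.0
    for row in rows:
        for field, value in row.items():
            values_touched += 1
            if field == "balance":
                total += value
    return total, values_touched


def total_balance_column_layout(columns):
    """Column layout: only the requested column is read."""
    balance = columns["balance"]
    return sum(balance), len(balance)


print(total_balance_row_layout(rows))        # (242.5, 12)
print(total_balance_column_layout(columns))  # (242.5, 3)
```

Both functions return the same total, but the column layout touches 3 values instead of 12. The gap widens as tables gain more columns.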

Schema Evolution

Because every Parquet file stores its own schema in its metadata, the schema can change over time. Newer files can add columns without rewriting older files, and a reader can reconcile files that were written with different but compatible schemas.
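
As a rough picture of schema evolution, the sketch below reads two archive files whose schemas differ by one added column. Everything in it is hypothetical (the dictionaries standing in for files, the function names, the sample values). It is a plain-Python model of the idea, not the merge logic of any Parquet library.

```python
# Two hypothetical archive files; the newer one adds an "email" column.
file_v1 = {
    "schema": ["id", "name"],
    "columns": {"id": [1, 2], "name": ["Ada", "Lin"]},
}
file_v2 = {
    "schema": ["id", "name", "email"],
    "columns": {"id": [3], "name": ["Raj"], "email": ["raj@example.com"]},
}


def merge_schemas(files):
    """Union of column names, keeping first-seen order."""
    merged = []
    for f in files:
        for column in f["schema"]:
            if column not in merged:
                merged.append(column)
    return merged


def read_all(files):
    """Read every file under the merged schema, padding absent columns with None."""
    schema = merge_schemas(files)
    result = {column: [] for column in schema}
    for f in files:
        row_count = len(f["columns"][f["schema"][0]])
        for column in schema:
            values = f["columns"].get(column, [None] * row_count)
            result[column].extend(values)
    return result


table = read_all([file_v1, file_v2])
print(table["email"])  # [None, None, 'raj@example.com']
```

Older records simply show no value for the new column, so an archive written years ago stays readable alongside newer data.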

Performance

With Parquet, data processing touches only the relevant data. Less data is scanned, so I/O usage drops, which reduces both cost and overall processing time.

Parquet is best suited to the following:

- Any application that needs faster data processing with a reduced footprint (archival or similar)

- Big data analytics

- Business intelligence

The table below shows the primary advantages of Parquet over other common file formats.

| Features | Parquet | Other File Formats |
|---|---|---|
| Storage Technique | Parquet stores data in a columnar format, which substantially reduces I/O usage. | CSV, JSON, Avro, and other common formats use row-based storage, which increases scanning and retrieval time. |
| Scanning Mechanism | Parquet first locates only the relevant data blocks based on the filter criteria, then aggregates only the rows and columns that match. | Other common file formats read entire rows to find the required column. |
| Text vs. Binary | Parquet stores data in binary format. | CSV, JSON, and most other common formats store data as plain text. |
| Splittable | Parquet files can be split or merged and processed accordingly. | JSON files can be split and processed individually when stored as JSON Lines. |
| Metadata Information | Parquet stores metadata in the footer. A reader first seeks to the end of the file to read the footer metadata length, then seeks backward to read the footer metadata itself. Blocks can therefore be located easily and processed in parallel. | CSV and JSON carry no block-level metadata. Avro stores its schema in the file header and separates blocks with sync markers, which makes locating blocks more tedious. |
| Self-Describing | Parquet files store metadata with the schema, version, and structure in each file. | CSV and JSON do not store self-describing metadata, which makes it difficult to understand the type of content present. |
| Storage Cost | Platforms such as AWS charge based on the amount of data scanned per query. Parquet has reduced storage requirements by more than 30% on large datasets, lowering the overall cost. | Because other common file formats use row-based storage, scan entire rows, and lack a comparable compression mechanism, their data processing cost is higher. |
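
The scanning mechanism in the table can be sketched as follows. The example assumes each block of rows (a row group) carries minimum and maximum statistics for a column, so a reader can skip blocks that cannot match a filter. The data, field names, and function name are hypothetical; this is an illustration of the idea rather than a real Parquet reader.

```python
# Hypothetical row groups, each carrying min/max statistics for "year".
row_groups = [
    {"stats": {"year": (2001, 2005)}, "year": [2001, 2003, 2005], "amount": [10, 20, 30]},
    {"stats": {"year": (2006, 2010)}, "year": [2006, 2008, 2010], "amount": [40, 50, 60]},
    {"stats": {"year": (2011, 2015)}, "year": [2011, 2013, 2015], "amount": [70, 80, 90]},
]


def sum_amount_for_year_range(row_groups, low, high):
    """Sum "amount" where low <= year <= high, skipping groups that cannot match."""
    total = 0
    groups_read = 0
    for group in row_groups:
        group_min, group_max = group["stats"]["year"]
        if group_max < low or group_min > high:
            continue  # statistics prove no row in this group qualifies
        groups_read += 1
        for year, amount in zip(group["year"], group["amount"]):
            if low <= year <= high:
                total += amount
    return total, groups_read


print(sum_amount_for_year_range(row_groups, 2007, 2010))  # (110, 1)
```

Only one of the three groups is read for this query. On platforms that bill by data scanned, skipping blocks in this way is where much of the cost saving comes from.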

To summarize, based on the features above, Parquet reduces the amount of data scanned compared with other file formats, which makes it the best option for decommissioning and archival systems.

| Attribute | CSV | JSON | XML | Avro | ORC | Protocol Buffers | Parquet |
|---|---|---|---|---|---|---|---|
| Text vs. Binary | text | text | text | metadata in JSON, data in binary | binary | binary | binary |
| Data types | no | yes | no | yes | yes | yes | yes |
| Schema enforcement | no (minimal with header) | external for validation | external for validation | yes | yes | yes | yes |
| Schema evolution | no | yes | yes | yes | no | no | yes |
| Storage type | row | row | row | row | column | row | column |
| OLAP/OLTP | OLTP | OLTP | OLTP | OLTP | OLAP | OLTP | OLAP |
| Splittable | yes, in its simplest form | yes, with JSON Lines | no | yes | yes | no | yes |

Conclusion

Given the factors above, a data preservation platform built on the Parquet file format is superior to one built on any of the other formats compared here. It allows you to manage data effectively and efficiently, in a secure and optimized fashion, with the following benefits:

- Storage optimization and reduced cost

- Superior processing and query speed

- Analytical efficiencies

- Better human experience through efficient metadata

- Unlimited scale

Board of Director, Chief Architect/Product Owner

Passionate about automating and solving complex data problems for Fortune 500 clients. He has developed leading-edge technology solutions for archiving, decommissioning, and migrating legacy systems. He has led the development of the Archon line of products and solutions to help clients in their open-source and digital transformation journeys.