With the tide turning on how companies manage data, many are now eyeing alternatives to Informatica Data Archive. It’s been a steady hand for years, keeping data safe and sound.

Informatica Data Archive has been the go-to solution for businesses, handling everything from storing old data to enforcing retention rules. When it comes to tough nuts like GDPR or HIPAA, it’s been on the ball. It even comes loaded with built-in connectors for heavyweights like SAP, Oracle, and other major ERP systems. That’s part of why so many businesses have relied on it to manage data from cradle to grave.

But here is the catch. Is it still keeping up? Today’s data landscape moves fast. Cloud migration is no longer optional. Real-time insights are mission critical. And with regulations shifting constantly, staying compliant is a moving target.

The big question is this: can an old on-premises Informatica Data Archive setup really keep pace with the breakneck speed and scale of today’s data demands? Or is it time to turn the page and look at something cloud-native, more flexible, high-performing, and easier on the wallet?

Keep reading to find out about smarter alternatives that can help you steer clear of the dreaded moment your boss walks in and says, “Did you figure this out yet?”

Why Organizations Initially Chose Informatica Data Archive

Back when businesses were just beginning to drown in data and batch jobs took their sweet time, Informatica Data Archive hit the sweet spot. It handled the mess with ease, making archiving feel less like a chore and more like flipping a switch.

To begin with, it simplified the process of archiving and managing data. No more manual work shifting outdated data to low-cost storage. It automatically kept only the critical, active data in high-performance systems, clearing up space and reducing costs.

Policy-Driven Automation stood out for making compliance a walk in the park. Instead of relying on manual checks that often slip through the cracks, it used a central policy engine to keep everything in order.

- Made GDPR compliance and audit record-keeping easy without technical skills.

- Ensured compliance with HIPAA, SOX, and GDPR.

- Provided audit trails, secure data deletion, and tamper-proof logs.

- Integrated seamlessly with SAP, Oracle, and other ERP systems.

- Enabled faster setup, fewer errors, and less risk.

- Helped companies keep data secure, organized, and compliant.

With agility and real-time analytics still on the horizon, Informatica Data Archive helped companies keep their data organized, secure, and compliant, becoming a trusted tool for enterprise data governance.

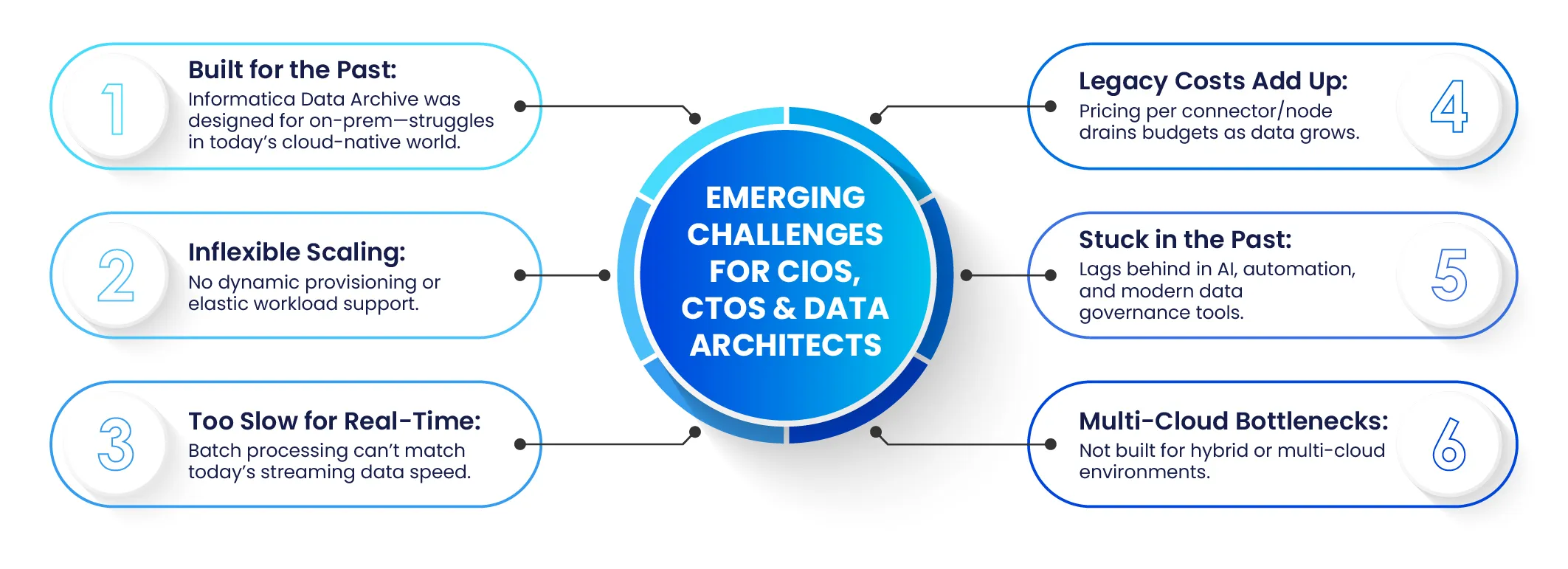

What are Emerging Challenges for CIOs, CTOs, and Data Architects?

Today, the goalposts have shifted for CIOs, CTOs, and data architects. While Informatica Data Archive still does the job at its core, the cracks are starting to show. Let’s unpack what that really means.

- Old Setup, New Expectations: Informatica Data Archive was built for a time when everything ran on on-premises systems. But times have changed. Businesses now lean toward cloud-native solutions that offer room to grow and shift as needed. Teams want the freedom to scale up or down without being boxed in by rigid systems. That is where Informatica starts to show its age. It lacks the flexibility to handle things like dynamic provisioning or elastic workloads.

- Speed is the New Standard: Today’s data world moves fast. We are talking petabytes flowing through streaming platforms like Kafka and powering real-time insights with tools like Snowflake. Batch processing might have worked years ago, but it cannot keep up anymore. Real-time data is no longer a luxury. It is the baseline. Informatica Data Archive just doesn’t match the speed and scale needed now, and it takes a lot of workarounds to fit today’s big data needs.

- The Cost of Keeping It Going: Legacy pricing models in Informatica Data Archive, like paying per connector or node, don’t scale well for growing organizations. As data grows, so do the costs. And that’s not even accounting for the ongoing patching, hardware upgrades, and vendor support that eat into the team’s time and budget.

- Innovation is Moving Faster Than Informatica Data Archive: AI-driven classification, automated data discovery, and policy-as-code tools are quickly becoming the new standard. Informatica Data Archive has a slower pace of updates, which leaves businesses scrambling to keep up with new data needs.

- Struggling with Multi-Cloud: More companies run a mix of cloud services like AWS, Azure, and GCP alongside on-prem data systems. Informatica Data Archive wasn’t built for these kinds of environments, and it often creates bottlenecks because it doesn’t play well with this architecture.

Because of these limitations, many organizations wonder: Is it still an asset, or has it become a roadblock slowing growth?

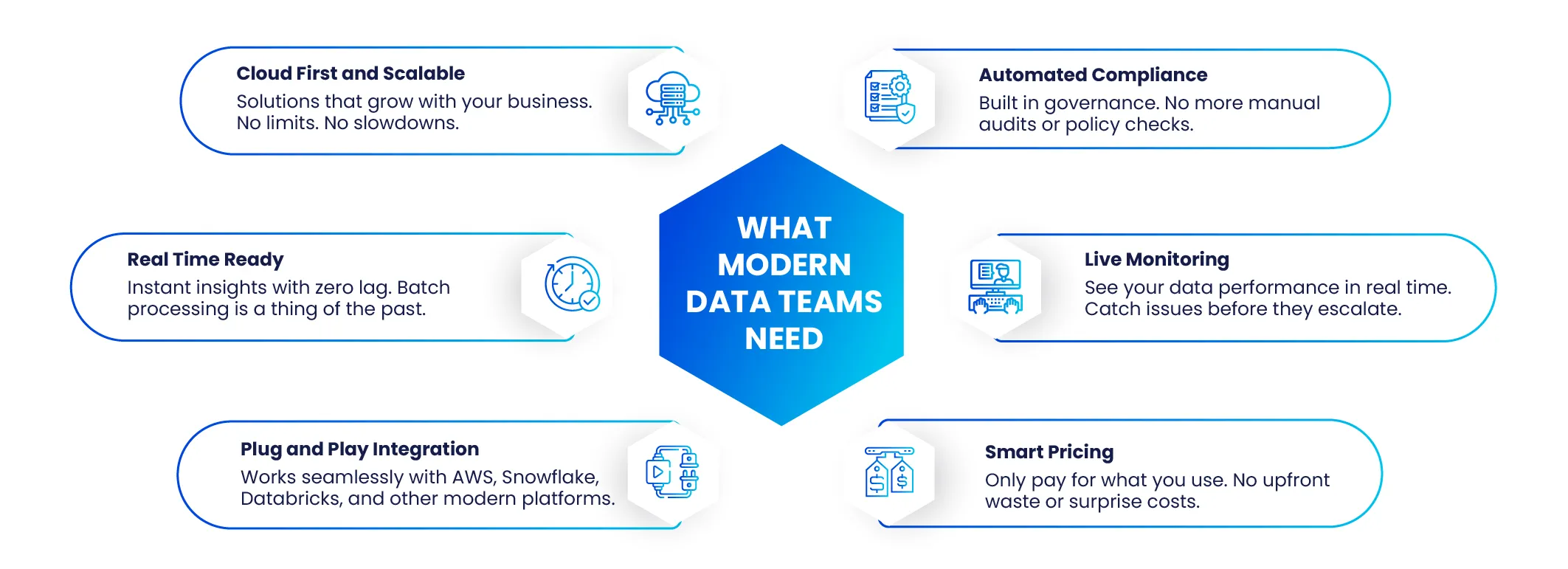

What Today’s Enterprises Need

Data isn’t what it used to be; businesses need tools to keep up. What worked before just doesn’t cut it anymore. The world is moving faster, and the tools companies use must also move faster.

So, what exactly do modern businesses need?

- Cloud-First, Scalable Solutions: No more old, outdated systems that can’t keep up with the cloud. Businesses need tools that grow with them, that can scale when they need it, and that can handle whatever data gets thrown at them. It’s all about ensuring data management doesn’t slow you down or cost you an arm and a leg when things ramp up.

- Real-Time Data: If you’re still waiting for batch jobs to process your data, you’re already behind. Real-time is the name of the game now. Businesses need tools that process data as soon as it’s created. No waiting, no delays. You need the insights right when you need them, not after the fact.

- Simple Integration with Everything: Modern businesses don’t rely on just one platform; they use AWS, Snowflake, Databricks, and whatever else is out there. If your data solution can’t integrate easily with these tools, it’s time to find something that can. Companies are tired of dealing with long integration projects that feel like they’ll never end. Get it to work and get it to work fast.

- Automated Governance & Compliance: Compliance is a headache. But it doesn’t have to be. Today, businesses need tools that automate the boring stuff like compliance checks, audits, and policy updates. If it’s not automated and integrated into your workflow, you’re just asking for problems down the line.

- Real-Time Monitoring: You can’t manage what you can’t see. You’re playing with fire if you don’t have dashboards that show you exactly how your data’s doing in real-time. You need to catch issues before they become a problem and stop them.

- Cost-Effective Pricing: Let’s face it; data is expensive. But it doesn’t have to be. No one wants to pay for storage and processing they’re not using. The old pricing models, where you pay for everything upfront, don’t work anymore. Pay as you go. Simple as that.

If businesses are serious about staying competitive, they need data management solutions that work in the real world. The ones that give you what you need, when you need it, without all the fluff. It’s time to stop using tools that drag you down and start using ones that push you forward.

| Feature | Informatica Data Archive | Modern Alternatives |
|---|---|---|
| Architecture | Monolithic, on-prem-centric | Serverless / containerized; fully managed services |
| Data Processing | Batch-oriented | Native streaming, micro-batches, event-driven |
| Integrations | ERP-focused, custom adapters | 200+ cloud connectors, open APIs, and SDKs |
| Governance as Code | GUI-first policy engine | GitOps workflows, policy linting, and automated tests |
| Monitoring & Observability | Limited dashboards | Native integration with DataDog, Prometheus, and Grafana |
| Pricing Model | License + Maintenance | Pay-as-you-go, tiered based on consumption |
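To make the “Governance as Code” row a little more concrete, here is a minimal sketch of what policy linting could look like when retention policies live in a Git repository and get checked in CI. The `RetentionPolicy` schema, the allowed actions, and the function names are all hypothetical and not taken from any vendor’s product.

```python
from dataclasses import dataclass

# Hypothetical policy schema for illustration only; not any vendor's format.
ALLOWED_ACTIONS = {"archive", "delete", "hold"}


@dataclass(frozen=True)
class RetentionPolicy:
    name: str
    dataset: str
    retain_days: int
    action: str


def lint_policy(policy: RetentionPolicy) -> list[str]:
    """Return human-readable problems; an empty list means the policy passes."""
    problems = []
    if not policy.name.strip():
        problems.append("policy name is empty")
    if policy.retain_days <= 0:
        problems.append(f"{policy.name}: retain_days must be positive")
    if policy.action not in ALLOWED_ACTIONS:
        problems.append(f"{policy.name}: unknown action '{policy.action}'")
    return problems


def lint_all(policies: list[RetentionPolicy]) -> list[str]:
    """Lint every policy and flag duplicate names, as a CI check might."""
    problems = []
    seen = set()
    for policy in policies:
        problems.extend(lint_policy(policy))
        if policy.name in seen:
            problems.append(f"{policy.name}: duplicate policy name")
        seen.add(policy.name)
    return problems


if __name__ == "__main__":
    policies = [
        RetentionPolicy("invoices-7y", "erp.invoices", 7 * 365, "archive"),
        RetentionPolicy("logs-bad", "app.logs", 0, "shred"),
    ]
    for problem in lint_all(policies):
        print(problem)
```

In a GitOps-style workflow, a check like this would typically run on every pull request so that a broken policy never reaches production.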

Top 7 Modern Informatica Data Archive Alternatives at a Glance

Enterprises can explore these seven modern alternatives to Informatica Data Archive at a glance:

- Archon Data Store

- IBM InfoSphere Optim Archive

- OpenText Info Archive

- Amazon S3 Glacier

- Komprise Transparent Move Technology

- Cohesity SmartFiles

- SAP Information Lifecycle Management (ILM)

Archon Data Store

Archon Data Store (ADS) is a modern, web-native data management platform designed as a unified archive and analytics repository. Built on open-source foundations, it seamlessly handles structured, semi-structured, and unstructured data at petabyte scale.

It comes not only with ETL, but also with a suite that helps you with data governance.

Key Features:

- Unified Archive & Analytics: ADS integrates archival storage with built-in analytics, enabling long-term data retention without exporting to separate systems.

- Scalable Architecture: As a cloud-native service and a self-hosted platform, ADS decouples compute from storage to scale to petabytes and beyond.

- Compliance & Governance: A robust compliance engine delivers compliance approval workflows, chain-of-custody, referential integrity, time- and event-based retention, eDiscovery support, and legal holds, all with full audit trails.

- Metadata-Driven Management: Centralized metadata catalog enables full-text search, automated classification, and policy-driven retention and deletion workflows.

- Tiered Storage & Cost Control: Intelligent tiering automatically moves colder data to cost-effective object stores (Amazon S3, Azure Blob, Google Cloud Storage) while keeping hot data on high-performance tiers.
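As a rough picture of how the tiering idea works, the sketch below assigns objects to a hot, warm, or cold tier based on how long they have sat idle. The thresholds, tier names, and function names are assumptions made for illustration; they do not describe how ADS implements tiering.

```python
from datetime import datetime, timedelta, timezone

# Illustrative thresholds; real platforms expose their own policy settings.
WARM_AFTER = timedelta(days=30)
COLD_AFTER = timedelta(days=180)


def choose_tier(last_accessed: datetime, now: datetime) -> str:
    """Map an object's idle time to a storage tier name."""
    idle = now - last_accessed
    if idle >= COLD_AFTER:
        return "cold"
    if idle >= WARM_AFTER:
        return "warm"
    return "hot"


def plan_moves(objects: dict[str, tuple[str, datetime]], now: datetime) -> dict[str, str]:
    """Return {object_key: target_tier} for objects whose tier should change."""
    moves = {}
    for key, (current_tier, last_accessed) in objects.items():
        target = choose_tier(last_accessed, now)
        if target != current_tier:
            moves[key] = target
    return moves


if __name__ == "__main__":
    now = datetime(2025, 1, 1, tzinfo=timezone.utc)
    inventory = {
        "orders/2024-12.parquet": ("hot", now - timedelta(days=5)),
        "orders/2024-09.parquet": ("hot", now - timedelta(days=95)),
        "orders/2023-01.parquet": ("warm", now - timedelta(days=700)),
    }
    print(plan_moves(inventory, now))
```

Separating the decision (`choose_tier`) from the plan (`plan_moves`) keeps the rule easy to test before any data actually moves.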

Why Choose It?

- A unified platform eliminates tool sprawl and reduces integration overhead.

- Cloud-native and on-premises deployment flexibility meets diverse IT strategies.

- Advanced security features (WORM, immutability, encryption) ensure regulatory compliance.

- Built-in analytics accelerate insight generation without needing separate BI tools.

- Petabyte-scale performance supports massive datasets without compromising speed or searchability.

- Seamless integration with cloud ecosystems (AWS, Azure, GCP).

- End-to-end data governance ensures integrity, auditability, and defensibility across its lifecycle.

- AI-ready architecture with support for machine learning model integration on archived data.

- Cost optimization through intelligent tiering and compression without sacrificing accessibility.

- Real-time and historical data access within a single environment for operational and analytical workloads.

- Automated compliance enforcement reduces manual overhead and risk of regulatory breaches.

- Disaster recovery and business continuity features are built at the storage and metadata levels.
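The time-based retention, event-based retention, and legal holds mentioned under Key Features can be pictured with a small sketch. The `ArchivedRecord` fields and the `eligible_for_deletion` rule below are hypothetical and only illustrate the general logic, not ADS’s actual compliance engine.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class ArchivedRecord:
    record_id: str
    archived_on: date
    retain_days: int
    event_based: bool = False                # True: clock starts at a business event
    trigger_event_on: Optional[date] = None  # e.g. the day a contract was closed
    legal_hold: bool = False


def eligible_for_deletion(record: ArchivedRecord, today: date) -> bool:
    """Decide whether a record may be deleted under its retention rule."""
    if record.legal_hold:
        return False  # a legal hold always overrides retention expiry
    if record.event_based:
        if record.trigger_event_on is None:
            return False  # the event has not happened, so the clock has not started
        start = record.trigger_event_on
    else:
        start = record.archived_on
    return today >= start + timedelta(days=record.retain_days)


if __name__ == "__main__":
    today = date(2025, 1, 1)
    records = [
        ArchivedRecord("inv-001", date(2015, 3, 1), 7 * 365),
        ArchivedRecord("inv-002", date(2015, 3, 1), 7 * 365, legal_hold=True),
        ArchivedRecord("contract-9", date(2020, 1, 1), 365, event_based=True),
    ]
    for record in records:
        print(record.record_id, eligible_for_deletion(record, today))
```

Checking the legal hold first means no retention setting can accidentally release a record that is under investigation.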

Ideal Use Cases:

- Regulated financial services, healthcare, government, and energy enterprises that require strict compliance and auditability.

- Large-scale migrations of legacy archives (on tapes, NAS, old platforms) into a single, searchable repository.

- IoT and event-driven environments that need real-time and historical data convergence in a single system.

- Organizations with multi-cloud strategies that need flexible, hybrid deployments across private and public clouds.

- Analytics-driven businesses that want to extract insights from archived data without complex ETL pipelines.

- Enterprises undergoing digital transformation that need a modern replacement for outdated archival systems.

- Legal and compliance teams that need fast, defensible access for eDiscovery, audits, and investigations.

- R&D and data science teams requiring large datasets to train AI/ML models directly from archive storage.

- Media and entertainment companies managing massive unstructured datasets (videos, images, documents) with secure, cost-effective storage.

- Global organizations that need region-specific compliance (GDPR, HIPAA, CCPA) and local data residency options.

IBM InfoSphere Optim Archive

IBM InfoSphere Optim Archive is a scalable, enterprise‑grade solution for structured data archiving throughout its lifecycle.

Key Features:

- Policy‑based data movement to cheaper storage tiers while preserving application context.

- Integration with IBM Db2, Oracle, SQL Server, and mainframes for live application archiving.

- Automated retention management with audit‑ready compliance reporting.

Why Choose It?

- Proven at scale in mission‑critical environments with hundreds of terabytes archived.

- Minimizes risk by embedding governance into the archiving workflow.

Ideal Use Cases:

- Financial services and healthcare organizations facing strict retention mandates.

- Enterprises seeking to offload historical data without disrupting live applications.

OpenText Info Archive

OpenText Info Archive delivers a unified archiving repository for structured and unstructured content, blending compliance, search, and analytics.

Key Features:

- Centralized archive supporting documents, email, database extracts, and webpages.

- Role‑based access and retention scheduling to meet SOX, GDPR, and HIPAA requirements.

- Full‑text search, reporting, and e‑discovery via an integrated UI.

Why Choose It?

- Mature product with tight integration into the OpenText content services platform.

- Simplifies audits by consolidating all records into a single, tamper‑proof store.

Ideal Use Cases:

- Large enterprises with heavy unstructured data and complex compliance landscapes.

- Organizations requiring advanced e‑discovery and legal hold capabilities.

Amazon S3 Glacier

Overview:

Amazon S3 Glacier provides low‑cost, highly durable cloud archive storage with multiple retrieval tiers for diverse SLAs.

Key Features:

- Three archive classes (Instant Retrieval, Flexible Retrieval, Deep Archive) for cost‑performance trade‑offs.

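- Eleven nines (99.999999999%) of durability via multi-AZ replication, plus integration with S3 Object Lock for immutability.

- Pay-as-you-go pricing with no upfront commitment, though minimum storage duration charges apply (90 days for Instant Retrieval and Flexible Retrieval, 180 days for Deep Archive).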
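One common way to use the three archive classes together is an S3 lifecycle rule that steps objects down as they age. The sketch below only builds the configuration dictionary; the prefix, rule ID, and day thresholds are arbitrary examples, and the helper name `build_archive_lifecycle` is made up for this illustration. The storage class identifiers (`GLACIER_IR`, `GLACIER`, `DEEP_ARCHIVE`) are the values S3 uses for Instant Retrieval, Flexible Retrieval, and Deep Archive.

```python
def build_archive_lifecycle(rule_id: str, prefix: str,
                            instant_days: int, flexible_days: int,
                            deep_days: int) -> dict:
    """Build an S3 lifecycle configuration that moves objects through
    the three Glacier storage classes as they age."""
    if not 0 <= instant_days < flexible_days < deep_days:
        raise ValueError("transition days must be non-negative and strictly increasing")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": instant_days, "StorageClass": "GLACIER_IR"},
                    {"Days": flexible_days, "StorageClass": "GLACIER"},
                    {"Days": deep_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


if __name__ == "__main__":
    config = build_archive_lifecycle("finance-archive", "finance/", 90, 180, 365)
    print(config)
    # With boto3 installed and credentials configured, the dictionary could be
    # applied with (bucket name is a placeholder):
    #   boto3.client("s3").put_bucket_lifecycle_configuration(
    #       Bucket="example-bucket", LifecycleConfiguration=config)
```

The thresholds here are spaced at least 90 days apart so that objects are not moved out of a class before its minimum storage duration has passed; actual values should follow your own retention and retrieval needs.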

Why Choose It?

- Virtually unlimited scale at roughly $1 per TB monthly for Deep Archive.

- Seamless integration with AWS analytics, backup, and compliance services.
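For a back-of-the-envelope feel of what the roughly $1 per TB per month figure means, here is a small calculation. It covers storage only and assumes a flat archive size; retrieval, request, and data transfer fees are deliberately left out, and the rate is the rounded figure quoted above rather than a current price list.

```python
def deep_archive_storage_cost(terabytes: float, months: int,
                              usd_per_tb_month: float = 1.0) -> float:
    """Storage-only cost estimate; ignores retrieval, request, and transfer fees."""
    if terabytes < 0 or months < 0:
        raise ValueError("terabytes and months must be non-negative")
    return terabytes * months * usd_per_tb_month


if __name__ == "__main__":
    # Example: a 500 TB archive kept for 7 years (84 months).
    cost = deep_archive_storage_cost(500, 7 * 12)
    print(f"${cost:,.0f}")  # prints $42,000
```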

Ideal Use Cases:

- Organizations migrating from tape to cloud for DR and long-term retention.

- Enterprises that need to retain extensive archives for years at minimal cost.

Komprise Transparent Move Technology

Overview:

Komprise offers an analytics‑driven data management platform with Transparent Move Technology (TMT) that tiers and archives data without stubs or agents.

Key Features:

- Policy‑based tiering and archiving with native file access via dynamic links.

- Deep analytics to identify cold data, predict costs, and model ROI before migration.

- Multi‑vendor and multi‑cloud integration with no disruption to users.

Why Choose It?

- Non‑intrusive deployment that preserves existing workflows and paths.

- Balances cost savings with direct data access for analytics and ransomware recovery.

Ideal Use Cases:

- Enterprises with large NAS or file‑share estates seeking cost‑effective archiving.

- Organizations that require hybrid cloud tiering with minimal user impact.

Cohesity SmartFiles

Cohesity SmartFiles is a modern, software-defined platform that blends file and object archival with intelligent data management. Designed for enterprise-scale workloads, it simplifies long-term retention while delivering built-in security, search, and compliance.

Key Features:

- Policy-based data tiering that moves cold data to low-cost cloud or on-prem storage automatically.

- Multi-protocol support (NFS, SMB, S3) to serve structured and unstructured data across environments.

- Built-in immutability, ransomware protection, and access controls for secure data retention.

- Seamless hybrid and multi-cloud support for AWS, Azure, and GCP.

Why Choose It?

- Unifies archival, governance, and cyber-resilience on a single platform.

- Reduces costs through deduplication, compression, and intelligent storage tiering.

- Allows teams to access and analyze archived data without the need to restore or rehydrate it.

- Eliminates the complexity of legacy ILM tools and point solutions by centralizing operations.

Ideal Use Cases:

- Enterprises managing large volumes of unstructured data, including logs, user files, and media assets.

- Organizations retiring legacy file servers or consolidating NAS infrastructure.

- Teams that need fast access to archive data for compliance, investigation, or analytics.

- Businesses looking for ransomware-resilient storage for regulated or sensitive content.

SAP Information Lifecycle Management (ILM)

Overview:

SAP ILM automates archiving, retention management, and legacy system decommissioning within the SAP Business Technology Platform.

Key Features:

- Rule‑based archiving that moves data to low‑cost stores while preserving business context.

- Automated legal retention and destruction workflows for GDPR, SOX, and more.

- System decommissioning that lets you retire old SAP or third‑party applications without losing access.

Why Choose It?

- Tight integration with SAP ERP, S/4HANA, and BW for seamless governance.

- Single solution for structured/unstructured ILM plus audit, e‑discovery, and reporting.

Ideal Use Cases:

- SAP-centric enterprises looking to reduce TCO and consolidate archives.

- Businesses that need end-to-end retention control from creation to destruction.

Why Archon Data Store Excels

While several platforms offer modern alternatives to Informatica Data Archive, ADS stands out for its:

- Unified Architecture: Unlike point solutions, ADS combines archiving, analytics, metadata management, and governance in one platform, reducing integration complexity and operational overhead.

- Scalable Performance: ADS decouples compute and storage at the petabyte scale, delivering consistent low-latency access for batch and real-time workloads.

- Comprehensive Compliance: With built-in legal holds, WORM storage, and detailed audit trails, ADS meets the strictest regulatory requirements, whereas other tools often require additional components or custom code.

- Cost Efficiency: Intelligent tiering and capacity-based pricing allow organizations to optimize costs across hot, warm, and cold data, avoiding overprovisioning inherent in tools with fixed cluster models.

By consolidating multiple data archive functions into a single, extensible platform, ADS simplifies the data lifecycle and accelerates time-to-value, making it the superior choice for enterprises seeking agility, compliance, and performance.

Strategic Shift

Data is both an asset and a liability. Legacy data archive products are proven, but they can slow down innovation. They may also raise costs and hide the insights they were designed to safeguard. The strategic imperative is clear: modernize your data lifecycle management.

Organizations that adopt cloud-native data archive options aren’t just saving money, they’re gaining:

- New Agility: Scale pipelines on demand, spin up environments in minutes, and iterate on data products at cloud speed.

- Easier Compliance: Handle data as code. Enforce policies automatically. Show audit readiness with one click.

- Future-Ready Infrastructure: Build a system that efficiently supports new data sources like IoT and machine learning models without costly redesigns.

The transition might seem daunting, but a phased approach and modern platforms help. You can keep essential capabilities while speeding up time-to-value. With requirements evolving constantly, improving data archival is critical: your organization either stays ahead or risks falling behind.