By Andrew Marsh, Mike Teachworth and Tom Rieger

Whether you look at Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform (GCP), there is one constant: they want you to use not just their platform, but their other technologies too. GCP has a catalog of 400 products and AWS has 11 different ‘database’ offerings, and that is before you crack open the AWS Marketplace with additional options from third parties that have gone through the ‘Amazon rigor’ to get listed. It is a land grab, because the cloud vendors know that once you move a major workload to them, they lock you in for the long haul – financially known as ‘annual recurring revenue’ (ARR), the holy grail in defining success in high tech today.

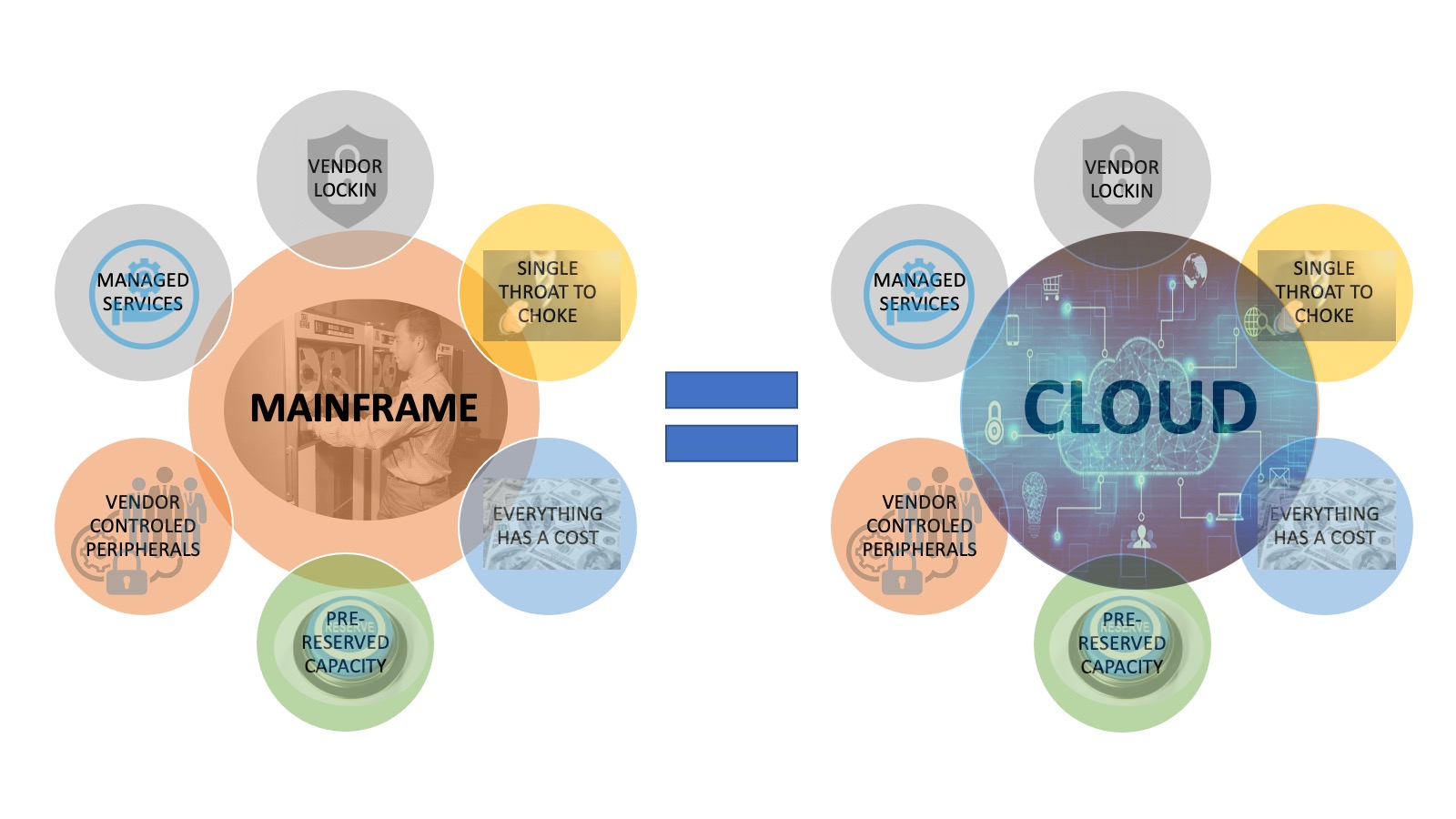

- VENDOR LOCK-IN: You were only as empowered as IBM’s research and development. 80-90% of what ran on the mainframe came from IBM.

- SINGLE THROAT-TO-CHOKE: If something did happen to the mainframe, you had one vendor to call.

- EVERYTHING HAD A COST: The mainframe was priced by the instruction – ‘MIPS’ (‘Millions of Instructions per Second’) was the phrase of the day – and every company would code, adopt and measure software based on how efficiently it used every instruction. The same went for disk usage, client equipment and even printing.

- PRE-RESERVED CAPACITY: When an organization purchased that mainframe, they essentially pre-reserved a set capacity up front – specifically performance with MIPS.

- ACCESSORIES AND PERIPHERALS: The add-ons needed to run that mainframe were numerous and further pushed the customer to buy everything from IBM: hardware like printers and disk drives, consumables like toner, and software like security, database (IMS and DB2), compilers and beyond.

- MANAGED SERVICES: Because of the complexities within the IBM world, many organizations just had IBM run all or part of their operations to keep them running effectively (or as effectively as the contract stated).

Fast forward to today, and the cloud providers are trying to be your next MAINFRAME – or shall we call it CLOUDFRAME. Let’s compare:

- VENDOR LOCK-IN: Once you are using their platform as a ‘system of record’, with their database offerings or ‘marketplace’ technologies driving more platform decisions, getting off their cloud is just as painful as migrating off anything on-premise.

- SINGLE THROAT-TO-CHOKE: They all have standard pricing for ‘enterprise support’ for their entire catalog of services and solutions. Whether this support is of any quality does not matter – what matters is that one phone call or web portal interaction.

- EVERYTHING HAS A COST: All clouds bring a degree of complexity (and perceived transparency) to their pricing. AWS has 44 different 8-CPU virtual machines to choose from, each involving a different mix of processor vendor and quality, memory, network performance, and storage throughput. But everything has a cost. Consider the obscurity that comes with AWS Aurora database pricing. The biggest cost factor is storage, billed on actual inputs/outputs against the storage tier in a one-month window – something a client has no control over and cannot tune, and the definition of the unit ‘IO’ remains very obscure once you consider the database layer and code.

- PRE-RESERVED CAPACITY: All clouds offer a transparent CPU/RAM pricing model that also allows organizations to get discounts for a 1- or 3-year commitment. But the simplicity stops there. Once you start to add other necessary components, the TCO bloats dramatically. AWS and Azure have a very obtuse costing model for their higher-end storage, where ‘input/outputs per second’ and/or ‘megabytes per second throughput’ are something that you pre-reserve and pay for, BUT you are never sure whether you actually consume it. EDB has found that storage subsystems can be provisioned at a level of performance that will never be realized, bloating the overall costs by 400% to 500%. The same goes for monitoring tools, high availability and more.

- ACCESSORIES AND PERIPHERALS: Unlike the mainframe, where this referred to physical components and consumables, in the cloud this quickly applies to incremental capabilities like network consumption in and out, migration tools, monitoring (we dare anyone to reliably predict what AWS CloudWatch will cost on a single enterprise database workload), and more. All of the training and certifications they offer further drive this incremental consumption and obscure alternatives that have little or no cost.

- MANAGED SERVICES: Now it is called ‘as a service’: an attempt to simplify the provisioning and maintenance of the layers of enterprise systems, and to sell the idea that ‘as a service’ lets a company have fewer IT people because they make it ‘simple’. In reality, these ‘as a service’ offerings further the facade to drive more compute, storage and software from that one vendor (or possibly from within their Marketplace). In many instances, they use older VMs and storage to wire together a ‘cloud service’. For instance, Azure’s database as a service for Postgres is built on Intel processors from 2013, slow storage rated at 3 IOPS per gigabyte of consumption, and a version of Postgres (v11.6) that is 2 major versions behind (current is v13) and 5 minor versions back as it relates to security patching (latest is v11.11).
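To make the storage points above more concrete, here is a minimal Python sketch of what a rating of 3 IOPS per gigabyte (the figure quoted above) implies. The function names, the 500 GB data size and the 6,000 IOPS target are hypothetical values chosen for illustration, not figures from any vendor price list.

```python
import math

# Rating quoted in the article; treat it as an input, not a vendor guarantee.
IOPS_PER_GB = 3


def baseline_iops(size_gb, iops_per_gb=IOPS_PER_GB):
    """IOPS you get when performance scales linearly with provisioned size."""
    return size_gb * iops_per_gb


def size_needed_for_iops(target_iops, iops_per_gb=IOPS_PER_GB):
    """Smallest volume (whole GB) whose rating reaches the target IOPS."""
    return math.ceil(target_iops / iops_per_gb)


def overprovision_factor(data_gb, target_iops, iops_per_gb=IOPS_PER_GB):
    """How many times more storage you provision than the data itself needs."""
    needed_gb = max(data_gb, size_needed_for_iops(target_iops, iops_per_gb))
    return needed_gb / data_gb


if __name__ == "__main__":
    data_gb = 500        # hypothetical database size
    target_iops = 6000   # hypothetical workload requirement
    print("Baseline IOPS at data size:", baseline_iops(data_gb))
    print("GB needed for target IOPS:", size_needed_for_iops(target_iops))
    print("Storage over-provisioning factor:", overprovision_factor(data_gb, target_iops))
```

Under these assumed numbers, a 500 GB database is rated for 1,500 IOPS, so reaching 6,000 IOPS means provisioning 2,000 GB, four times the space the data needs. Real pricing tiers differ by provider and change over time, so the sketch only shows the shape of the calculation.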

With on-premise computing, organizations never bought from just one hardware vendor, and often used different storage vendors as well. As organizations move to the cloud, a few simple facts persist:

- DO NOT GET HYPNOTIZED BY THE PERCEPTION OF ‘…AS A SERVICE’. Think of a large retail store, which has (1) store brands and (2) name brands. For some items there is little difference in quality and the store brand is simply cheaper. For other items the difference in quality is big.

- JUST BECAUSE THEY MAKE IT SOUND ‘OPEN’ DOES NOT MEAN IT IS. A prime example is the database Postgres. AWS Aurora is an old ‘fork’ of community Postgres, to the point where they describe it as ‘Postgres compatible’. Using Azure as another reference, its service can even be 3 major versions behind the current community release, which is of grave concern from a security and capabilities perspective.

- DO YOU HAVE ‘CONTROL’ IN THEIR ‘AS A SERVICE’?: The answer is NO. Looking at how all 3 cloud providers turn Postgres into a service, they somewhat hide how you can best tune the database to your workload. They all only let you change a subset of parameters, and add others that make their system more proprietary. Community Postgres has ~350 tunable parameters and they all only let you change ~50% of them. You also do not have ‘superuser’ access to the machines, which limits what you can execute against the database. Finally, they all give you no control over how storage is set up beyond a single volume of space. In OLTP environments, you may want to separate your logs from your data and indexes, or in data warehousing use database partitioning to break up a very large table across different volumes. None of that is possible.
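One way to check how much tuning a given service really leaves you is to look at the `context` column of the `pg_settings` view, which records when and by whom each parameter can be changed. The Python sketch below is an illustration only: the sample rows are a small hypothetical extract, and the helper names are invented for this example. Managed services may also expose some parameters through their own configuration interfaces, which this does not capture.

```python
from collections import Counter

# Hypothetical sample in the shape returned by:
#   SELECT name, context FROM pg_settings;
# The contexts shown are those of community Postgres.
SAMPLE_ROWS = [
    ("work_mem", "user"),
    ("shared_buffers", "postmaster"),
    ("log_min_duration_statement", "superuser"),
    ("wal_level", "postmaster"),
    ("server_version", "internal"),
]


def summarize_contexts(rows):
    """Count parameters per context (user, superuser, postmaster, ...)."""
    return Counter(context for _, context in rows)


def settable_without_superuser(rows):
    """Parameters any role can change for its own session with SET."""
    return sorted(name for name, context in rows if context == "user")


if __name__ == "__main__":
    print("Parameters per context:", dict(summarize_contexts(SAMPLE_ROWS)))
    print("Settable without superuser:", settable_without_superuser(SAMPLE_ROWS))
```

Running the same query against a self-managed instance and against each cloud service, then comparing the results, gives a first rough picture of what you are giving up.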

Do not get hypnotized by the perceived simplicity of these services or by the way they position themselves as a ‘best practice’ from a technology or pricing perspective. EDB has found some of these services are 3x to 4x more expensive than they should be when ‘defaults’ or ‘maximums’ are used. Review this WEBINAR for specific comparisons and discussions. The SLAs for some of these ‘as a service’ offerings are only 99.9% or at best 99.99%. Inconsistencies in performance and throughput abound. Platform 3 Solutions and EDB are ready to share what ‘good’ should look like in this transformation to the cloud.
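For a sense of what those SLA percentages mean in practice, the short Python sketch below converts an availability figure into permitted downtime. It assumes a 365-day year and that the SLA is measured over the whole year; actual contracts often measure per month and carve out exclusions, so treat the results as rough orders of magnitude.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: non-leap year, no maintenance exclusions


def allowed_downtime_hours(sla_percent):
    """Hours per year a service may be down and still meet the SLA."""
    return (100 - sla_percent) / 100 * HOURS_PER_YEAR


if __name__ == "__main__":
    for sla in (99.9, 99.99):
        hours = allowed_downtime_hours(sla)
        print(f"{sla}% SLA: about {hours:.2f} hours ({hours * 60:.0f} minutes) per year")
```

At 99.9% that is roughly 8.76 hours of permitted downtime a year; at 99.99% it is a little under 53 minutes.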

About the Authors

Andrew Marsh – As the director of customer engineering for Platform 3 Solutions, Andrew is engaged with organizations every day to help them realize a proper migration, archive and retirement strategy for data and applications. He brings over 25 years of experience in enterprise computing, having previously worked at Mobius, Dell/EMC and Box.

Mike Teachworth – Mike is the Director of the North American Customer Engineering team at EDB, helping the largest global organizations move from commercial to open-source data management platforms. Before EDB, Mike was an executive at IBM and Informix and the owner of his own consulting firms.

Tom Rieger – Bringing over 30 years of experience to data management, Tom works for EDB and helps scope and evaluate client opportunities in using open-source Postgres for top tier requirements. He previously worked for OpenText, Dell/EMC, IBM, FileNet, Informix and in IT leadership positions at Labatt and Textron.